I recently read two books – Weapons of Math Destruction by Cathy O’Neil and Algorithms of Oppression by Safiya Umoja Noble – that explore how algorithms in commercial software hide harmful biases. The output of “machine learning” algorithms is shaped by biases in the training data they learned to classify from, and in the source data they draw upon.

Software and service providers have a hard time perceiving the biases in their products because of a severe lack of diversity in their employee base; a lack of the skills in humanities and ethics needed to identify the impacts of biases; and advertising business models that are motivated to serve eyeballs to advertisers, rather than to provide information to customers.

The biases are concealed in proprietary “black box” algorithms; consumers and citizens aren’t able to investigate the rules and the training data that lead to biased outcomes. The biases in the algorithms are all the more pernicious because ordinary people, including professionals, tend to place unwarranted faith in the output of computers as “scientific” and fact-based.

Weapons of Math Destruction covers a broad range of systems, with services ranging from credit scoring and insurance, to job applications and college admissions, to criminal justice, along with advertising and information.

In the olden days of non-automated lending discrimination, for example, bank managers would directly weed out black and brown applicants. Now, an algorithm might factor in the economic stability of an applicant’s neighbors and relatives, holding back creditworthy individuals who, like many in communities of color, live in poorer neighborhoods and come from low-income families.
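
To make the proxy effect concrete, here is a minimal sketch in Python. It is not taken from either book or from any real lender: the `Applicant` fields, the `toy_credit_score` function, and all of the weights are hypothetical, chosen only to show how a score that never looks at race can still penalize an applicant for where they live.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    income: float                      # the applicant's own annual income
    on_time_payment_rate: float        # share of the applicant's own bills paid on time (0 to 1)
    neighborhood_median_income: float  # proxy feature: describes the neighbors, not the applicant


def toy_credit_score(a: Applicant, use_neighborhood: bool = True) -> float:
    """Hypothetical scoring rule with made-up weights, for illustration only."""
    score = 300 + 400 * a.on_time_payment_rate + min(a.income, 100_000) / 1_000
    if use_neighborhood:
        # The proxy: the applicant is rewarded or penalized for the neighborhood's income.
        score += min(a.neighborhood_median_income, 100_000) / 1_000
    return score


# Two applicants with identical personal finances who live in different neighborhoods.
applicant_a = Applicant(income=50_000, on_time_payment_rate=0.98, neighborhood_median_income=30_000)
applicant_b = Applicant(income=50_000, on_time_payment_rate=0.98, neighborhood_median_income=90_000)

score_a = toy_credit_score(applicant_a)
score_b = toy_credit_score(applicant_b)
score_a_no_proxy = toy_credit_score(applicant_a, use_neighborhood=False)
score_b_no_proxy = toy_credit_score(applicant_b, use_neighborhood=False)

print("with neighborhood feature:   ", score_a, score_b)
print("without neighborhood feature:", score_a_no_proxy, score_b_no_proxy)
```

With the neighborhood feature switched on, the applicant from the poorer neighborhood scores lower despite an identical personal record; with it switched off, the two scores match. Real scoring models are far more complex and are not public, which is part of the problem the books describe.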

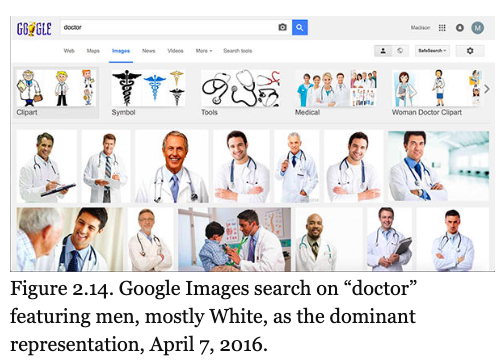

Algorithms of Oppression focuses most strongly on advertising-based algorithms, and Google search in particular, and uses a black feminist lens focusing on harms to women of color, as well as other historically marginalized communities. Representations are systematically biased. One of many examples in the book is this Google image search, in which the images shown for doctors are of white men.

Another is the experience of an African-American hair salon owner who finds Yelp even less helpful than a typical small business owner does. Removing images from the Yelp profile took away a marketing advantage that clearly showed a Black-owned business. The salon owner reported that her customers hesitated to use a “check-in” feature due to culturally justified skepticism of features that could be used for surveillance.

The risks covered in the books are important issues for 21st-century civic life and policy, and I recommend both to non-experts. Algorithms of Oppression was somewhat frustrating; its important ideas would be better communicated with the assistance of a culturally informed editor. The author uses the term “neoliberal” with varying precision, including in references to classical 18th-century liberalism. The author treats history in very broad and sometimes misleading generalities. For example, she refers to the “public-interest journalism environment prior to the 1990s” without a nod to, for example, the racialized narratives of crime that shaped mass-market journalism from the very beginning.

The remedies that the books outline are suggestive; initiatives to increase accountability and provide alternatives are at a very early stage. Weapons of Math Destruction starts with recommendations for codes of professional ethics among designers of algorithmic models and machine learning systems. WMD also recommends regular auditing of algorithmic systems, with academic support and regulatory requirements, including public access.
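
As a rough illustration of what one small piece of such an audit could look like, the Python sketch below compares approval rates across groups in a log of decisions. This is my own simplified example, not a method prescribed by the book: the function names are hypothetical, the data is made up, and a real audit would need access to the system’s actual decisions and a far more careful choice of measures.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the share of approved decisions per group.

    `decisions` is an iterable of (group_label, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Ratio of the lowest group approval rate to the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so the rates are equal
    return min(rates.values()) / highest


# Made-up toy data: 10 decisions for each of two hypothetical groups.
toy_decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = approval_rates(toy_decisions)
ratio = rate_ratio(rates)
print(rates, ratio)
```

A ratio well below 1.0 does not prove discrimination on its own, but it is the kind of signal an auditor would flag for closer investigation, and it can only be computed if someone outside the company can see the decisions.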

Algorithms of Oppression is more skeptical of a model based on regulation of private sector services, finding commercial motives to be irredeemably untrustworthy. Noble proposes publicly funded search systems that would be free from advertising business model bias and would provide more contextualized information designed to address the needs of communities, including traditionally oppressed communities.

A world where the public sector supports information discovery systems with the ease of use of commercial software is far from today’s environment, where public sector tools tend to be unsophisticated and clunky, and where public funding is exploited by contractors whose core competency is billing public agencies. It is very difficult to provide search services that scale to vast amounts of information; that can be used by billions of ordinary people, unlike the pre-Google tools used by a small number of expert professionals; and that can provide contextualized information.

The focus on information serving communities rather than individuals has substantial risks the book doesn’t cover. Information services that serve religiously conservative communities can be customized to conceal information about religious diversity, LGBTQ information, evolution, and so on. Mainstream US residents would agree that the top search results for “black girls” shouldn’t be porn (a problem the author discovered with Google search in 2012, and which had been largely fixed by 2016); but a religious community might also object to images that show exposed hair or naked elbows. Decisions about what information should be shown and suppressed in a culturally sensitive manner are more complicated and fraught than the book addresses.

Also, public search systems may not be a panacea in a world where government information systems carry differently terrible risks of surveillance and political oppression; consider China’s social credit system.

These books are just starting to scratch the surface in critiquing algorithmically biased systems, and in proposing remedies. These are important issues, and it’s a good time to engage in critique and start to consider solutions.